Highly Available workloads across Multiple Kubernetes Clusters

Our journey begins in 2003, where I somehow blagged a Unix role, largely due to experience with FreeBSD and sparse access to a few Unix systems over the years. This first role consisted of four-day stints of twelve-hour shifts, where we were expected to watch for alerts and fix a myriad of different systems ranging from:

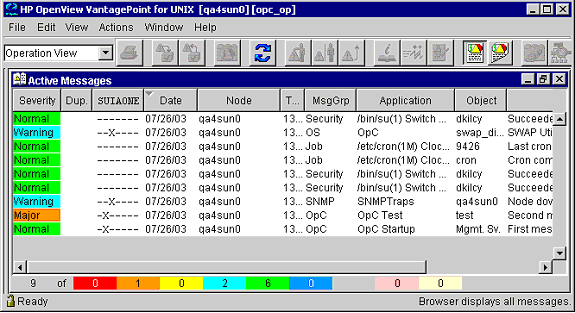

Every alert in HP OpenView (which you can see in the screenshot below) would lead down a rabbit hole of navigating various multi-homed jump boxes in order to track down the host that had generated the alert.

Once we finally logged onto the machine we were often presented with an obscure version of Unix, a completely random shell or a user-land set of tooling that was incomprehensible... it was ace.

Typically in this environment the architecture was 1:1, as in one server would host one big application. Some applications, however, had strict demands and in some cases penalties in the event of downtime, and it was these applications that would often make use of the various clustering technologies of the time in order to provide high availability. Given the infrastructure was a complete hodgepodge of varying systems, it stands to reason that the clustering software followed suit, which meant that we were presented with systems such as:

High Availability

As mentioned above, in this place of work some applications simply weren’t allowed to fail as there would be a penalty (typically per-minute downtime charges), so for these applications a highly available solution was required. These solutions exist to keep an application as available to end users as possible, so in the event an application crashes it’s the clustering software’s job to restart it. Although you could create your own with a bash script:

```bash
#!/bin/bash
```
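A minimal sketch of the kind of restart loop such a script might contain, assuming the application is started by a command passed as arguments. The `supervise` function, the restart cap and the `RESTART_DELAY` variable are all hypothetical, added here so that the loop can terminate; a real watchdog would more likely loop forever.

```bash
#!/bin/bash
# Hypothetical watchdog sketch: rerun a command each time it exits non-zero.
# Usage: supervise MAX_RESTARTS COMMAND [ARGS...]
supervise() {
  local max_restarts=$1
  shift
  local restarts=0
  # "until" keeps rerunning the command for as long as it fails.
  # A clean exit (status 0) is treated as an intentional stop.
  until "$@"; do
    if [ "$restarts" -ge "$max_restarts" ]; then
      echo "giving up after $restarts restarts" >&2
      return 1
    fi
    restarts=$((restarts + 1))
    echo "command exited, restart $restarts of $max_restarts" >&2
    # Pause between attempts so a crash loop does not spin the CPU.
    sleep "${RESTART_DELAY:-1}"
  done
  return 0
}
```

Something like `supervise 5 ./my-app` (where `./my-app` is a placeholder) would restart the program up to five times. Note that this only helps while the machine itself stays up.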

So restarting the program to ensure availability is one thing, but what about things such as OS upgrades or hardware failures? In those cases the application will cease to run, as the system itself will be unavailable. This is where multiple systems are clustered together in order to provide a highly available solution that survives such downtime: when a system becomes unavailable, it’s the clustering software’s role to make a decision about how and where to restart the application. A lot of my time was spent fighting with Sun Cluster and how it would implement high availability 🫠

Implementing High Availability (back in the day)

In these systems there were a number of prerequisites in order for high availability to work:

Shared storage

If the application had persistent data (and pretty much all of them were based upon Oracle databases back then), then this underlying storage needed to be shared. This is so that in the event of a failure the storage could be mounted on the node selected to take over the workload.

Re-architecting the application

This doesn’t technically mean rewriting the application; it means writing various startup and shutdown scripts with enough logic in them that the clustering software can run them to completion without ending up in a failed state.

Networking

If the application moved around during a failover, external programs or end users still needed to access it with the same address/hostname, so in the event of a failover a virtual IP (VIP) and hostname would typically be added to the node that the application was failing over to.

Quorum

In order for a node to become the chosen one in the cluster, a quorum device should be present to ensure that a decision can be made about who will take over the running of applications and, in the case of a network connection failure between nodes, that a “split brain” scenario can’t occur.

Split Brain will occur when cluster nodes can no longer communicate, leading them to believe that they’re the only nodes in the cluster and thus voting for themselves in order to become the leader. This would lead to multiple nodes all accessing the same data or advertising conflicting IP addresses, and generally causing chaos.
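The majority rule behind quorum can be sketched as follows. The `has_quorum` helper and the weighting (one vote per node, one vote for the quorum device) are assumptions for illustration; real clustering products configure vote counts in their own ways.

```bash
#!/bin/bash
# Hypothetical helper: succeed only when a strict majority of all
# configured votes is visible to this node.
# Usage: has_quorum VOTES_SEEN TOTAL_VOTES
has_quorum() {
  local seen=$1
  local total=$2
  [ "$seen" -ge $((total / 2 + 1)) ]
}

# Two nodes plus a quorum device gives 3 votes in total. After a network
# split, the node that reserves the device sees 2 votes (itself + device)
# and may carry on; the other node sees only its own vote and must stop.
if has_quorum 2 3; then echo "node with device: may run applications"; fi
if ! has_quorum 1 3; then echo "isolated node: must not run applications"; fi
```

Without the quorum device there would only be 2 votes, each isolated node would see 1, and neither could claim a majority, which is safer than both claiming leadership.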

Did HA work?

Sometimes

One evening I was asked to lead a large planned change to upgrade the hardware on a mighty Sun E10K that was being used to host arguably one of the most important applications within the entire company. The plan was relatively straightforward:

- Log into node1, check it was primary and running the application.

- Log into node2, ensure its health (hardware/disk space etc.)

- Initiate a failover on node1

- Watch and ensure services came up on node2 and validate the application was healthy and externally accessible

- Upgrade hardware on node1

- Check node1 is healthy again (after upgrade)

- Initiate a failover on node2, back to node1

- Realise something is wrong

- Panic

- Really panic

- Consider fleeing to a far flung country

So what went wrong?

The application failover from node1->node2 went fine: we saw the application shut down, followed by the shared storage being unmounted and finally the network information being removed from node1. Then on node2 we witnessed the storage being mounted and the networking details being applied, followed by the application being restarted. We even had the applications/database people log in and watch all the logs to ensure that everything came up correctly.

When we failed back, things went very wrong. The first application/storage/network all moved back; however, the second application stopped and everything just hung. Eventually the process exited with an error about the storage being half remounted. The app/database people jumped onto the second node to see what was happening with the first application whilst we tried to work out what was happening. Eventually we tried to bring everything back to node2, where everything was last running successfully, and again the application stopped and the process timed out on the storage 🤯

At this point we had a broken application spread across two machines, trying to head in opposite directions but stuck in a failed state. Various incident teams were being woken up and various people started prodding and poking things to fix it... this went on for a few hours before we worked out what was going on. (Spoiler: it was step 4.)

So this change was happening in the middle of the night, meaning that ideally no-one should really be using the application or noticing it not working during the “momentary” downtime. One of the applications team had opened a terminal and changed directory to where the application logs were (on the shared storage) in order to watch and make sure the application came up correctly. However, this person then went to watch TV or get a nap (who knows), leaving their session logged on and sitting within the directory on the shared storage. When it came to failing the application back, the system refused to unmount the shared storage as something was still accessing the filesystem 🤬 ... even better, when we tried to bring the other half of the application back it failed because someone was looking at the database logs when it attempted to unmount the shared storage for that 🫠
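A check along these lines before failing back could flag a stray session like that one. This is a minimal, Linux-specific sketch (it relies on the `/proc/<pid>/cwd` symlinks), not something from the original change plan; `cwd_holders` is a hypothetical name, and it only looks at working directories, not at open files. Tools such as `fuser` or `lsof` give a fuller picture.

```bash
#!/bin/bash
# Hypothetical pre-unmount check: print the PID of every visible process
# whose current working directory is DIR or somewhere beneath it.
# Usage: cwd_holders DIR
cwd_holders() {
  local target
  # Resolve symlinks so the path matches what /proc reports.
  target=$(cd "$1" && pwd -P) || return 2
  local proc_dir
  local pid
  local cwd
  for proc_dir in /proc/[0-9]*; do
    pid=${proc_dir#/proc/}
    # Processes we may not inspect, or that have just exited, are skipped.
    cwd=$(readlink "$proc_dir/cwd" 2>/dev/null) || continue
    case "$cwd" in
      "$target" | "$target"/*) echo "$pid" ;;
    esac
  done
}
```

A failover script could refuse to start (rather than hang half way) if `cwd_holders /path/to/shared/storage` prints anything; the path here is a placeholder.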

I think it was this somewhat stressful experience that weirdly made me want to learn more about Sun Cluster and other HA solutions, and here we are today :-D

High Availability in Kubernetes

Much like that script I embedded above, Kubernetes endlessly performs HA through what is typically referred to as the “reconciliation loop”. The reconciliation loop’s role is largely to compare expected state with actual state and reconcile the difference, so if the expected state is 3 pods and there is only 1, it schedules 2 more, and so on. Additionally, within the Kubernetes cluster (actually it comes from etcd but 🤷🏼‍♂️) is the concept of leader election, which allows things running within the cluster to elect a leader amongst all participants. This mechanism allows you to have multiple copies of an application running and, with a bit of simple logic, ensure that only the active/leader instance is the one actually doing the processing or accepting connections.
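Both ideas can be sketched in a few lines of shell. This is a toy, single-machine illustration and not how Kubernetes implements either: `reconcile` and `try_lead` are hypothetical names, the lease is a plain file rather than a Kubernetes object, and timestamps are passed in by hand so the behaviour is easy to follow. The read-then-write on the lease file is also not atomic, whereas a real election needs the store to reject conflicting updates.

```bash
#!/bin/bash
# Hypothetical reconcile step: compare desired and actual replica counts
# and print the action that would close the gap.
# Usage: reconcile DESIRED ACTUAL
reconcile() {
  local desired=$1
  local actual=$2
  if [ "$actual" -lt "$desired" ]; then
    echo "schedule $((desired - actual))"
  elif [ "$actual" -gt "$desired" ]; then
    echo "remove $((actual - desired))"
  else
    echo "nothing to do"
  fi
}

# Hypothetical lease-style election: a participant becomes (or stays)
# leader if the lease is free, already its own, or has expired.
# Usage: try_lead LEASE_FILE IDENTITY NOW TTL
try_lead() {
  local lease_file=$1
  local me=$2
  local now=$3
  local ttl=$4
  local holder=""
  local expires=0
  if [ -s "$lease_file" ]; then
    read -r holder expires < "$lease_file"
  fi
  if [ -z "$holder" ] || [ "$holder" = "$me" ] || [ "$now" -ge "$expires" ]; then
    # Take or renew the lease; only the holder should do real work.
    echo "$me $((now + ttl))" > "$lease_file"
    return 0
  fi
  return 1
}

reconcile 3 1   # prints "schedule 2"
```

Each copy of an application would call something like `try_lead` on a timer and only process work while it succeeds; if the leader dies and stops renewing, the lease expires and another copy takes over.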